Parameterized Tests Using Pytest for Hardware in the Loop Testing

In Configuring a Hardware in the Loop Project, we reviewed the concept of hardware in the loop testing using the Pytest framework. That article covered the high-level concepts needed to create a basic test framework and structure. In this article, we will review the Pytest framework in more depth, focusing on parameterized tests. By the end of this article, the reader will understand the difference between individual tests and parameterized tests. The reader will also be able to use parameterization to create powerful test executives with very few lines of code.

Basic Pytest Testing

The traditional way of using the Pytest framework, as demonstrated in Configuring a Hardware in the Loop Project, is to write a separate “test” function for each test you would like to run. In that demonstration, one function set a register (setBit) and another cleared a register (clearBit). If you need a series of functions that set and clear several registers, writing each one by hand quickly becomes time-consuming and tedious. The default approach I have seen many engineers take is to loop over the expected values inside a single test function.

For example:

```python
# Expected values to be found in the read registers
register_values = [12, 8, 9, 22]

# Pytest test that checks all register contents in one loop
def test_checkRegisters():
    # enumerate() yields (index, expected_value) pairs;
    # the index selects which register to read.
    for index, expected in enumerate(register_values):
        # Note: get_reg_data() is defined elsewhere
        assert get_reg_data(index) == expected
```

This style of test will work, and all test cases will be covered. The issue, however, is that everything inside test_checkRegisters() counts as one single test, so your test report registers a single test execution. If every iteration passes (or every iteration fails), the aggregate result is the same, and reporting a single pass or fail would be acceptable. But what happens if only one of the iterations fails the assertion? The whole test is reported as a failure. Worse, because a failed assert raises an exception, the loop stops at that point and the remaining registers are never checked. In some cases (e.g., very large test suites), this might be intended, but in an example like this, where these are the only tests being executed, it is less than ideal.
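A small, self-contained sketch makes the early-exit behavior concrete. Here get_reg_data() is replaced by a hypothetical lookup into a fake_registers dictionary in which registers 1 and 3 both hold wrong values; check_registers_in_loop() is an illustrative copy of the loop above that also records which registers it visited.

```python
# Hypothetical stand-in for the hardware: registers 1 and 3 hold wrong values.
fake_registers = {0: 12, 1: 7, 2: 9, 3: 99}

# Expected values, as in the loop-based test above
register_values = [12, 8, 9, 22]

def get_reg_data(index):
    """Hypothetical replacement for the real register read."""
    return fake_registers[index]

def check_registers_in_loop(checked):
    """Same loop as test_checkRegisters(), recording each index it visits."""
    for index, expected in enumerate(register_values):
        checked.append(index)
        assert get_reg_data(index) == expected, f"register {index} mismatch"

checked = []
try:
    check_registers_in_loop(checked)
except AssertionError as err:
    print(f"Single failure reported: {err}")
print(f"Registers actually checked: {checked}")
```

Running this prints "Single failure reported: register 1 mismatch" followed by "Registers actually checked: [0, 1]". Register 3 is also wrong, but the loop never reaches it.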

Parameterized Pytest Testing

Using parameterization within the Pytest framework, we can reuse the same function repeatedly while still generating unique test cases that are reported individually by the test executive. In the example above, we can use parameterization to create a unique test for each register check. This means that if only one register check fails, we end up with three passing tests and one failing test (versus one complete test failure). Let us take a trivial example comparing “traditional” tests against parameterized tests. The following code demonstrates five individual tests:

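```python
# Five individual tests; each one is reported separately by Pytest.
# Pytest decides pass/fail by whether the test raises, not by its
# return value, so each test uses a trivially passing assert.
def test_Test1():
    assert True

def test_Test2():
    assert True

def test_Test3():
    assert True

def test_Test4():
    assert True

def test_Test5():
    assert True
```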

The following code demonstrates a single function that will be parameterized ten times (thus resulting in a report of 10 unique tests):

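```python
# A single test function; pytest_generate_tests() in conftest.py
# supplies the values for the testItem argument.
def test_params(testItem):
    assert testItem is True
```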

We then create an additional file named conftest.py, which Pytest loads automatically and which acts as our configurator. Within that file, we define the following functions:

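```python
# conftest.py

# Generate the test stimulus
def create_tests():
    # Return the test stimulus as a list of size 10.
    # This placeholder returns ten True values; a real implementation
    # could read a parameter file, query a database, or run a model.
    return [True] * 10

# Parameterize tests here
def pytest_generate_tests(metafunc):
    # This hook runs for every collected test function, so only
    # parameterize the ones that actually take a testItem argument.
    if "testItem" in metafunc.fixturenames:
        metafunc.parametrize("testItem", create_tests())
```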

Note two deviations from the traditional parameterization scheme:

- I’ve created a function that generates the test stimulus (only a placeholder is shown here). This allows you to read in parameter files, pull stimulus from a database, or generate sophisticated bit-true models on the fly.

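- I am not using the built-in “@pytest.mark.parametrize” decorator to create my test stimulus. If I were to use the decorator, my test stimulus would need to be defined statically, because the decorator’s arguments are evaluated when the test module is imported, whereas pytest_generate_tests() runs during test collection and can consult the Pytest configuration (metafunc.config).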
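For comparison, here is a sketch of the register check from earlier written with the conventional @pytest.mark.parametrize decorator. The stimulus list is fixed when the module is imported, which is the limitation described above. To keep the file runnable on its own, get_reg_data() is replaced by a hypothetical stub, and test_checkRegister is an illustrative name.

```python
import pytest

# Hypothetical stub standing in for the real hardware read
_FAKE_REGISTERS = [12, 8, 9, 22]

def get_reg_data(index):
    return _FAKE_REGISTERS[index]

# Expected values, fixed when this module is imported
register_values = [12, 8, 9, 22]

# Each (index, expected) pair becomes its own reported test,
# with IDs such as test_checkRegister[0-12] and test_checkRegister[1-8].
@pytest.mark.parametrize("index, expected", list(enumerate(register_values)))
def test_checkRegister(index, expected):
    assert get_reg_data(index) == expected
```

Running pytest on this file reports four separate tests, so a single bad register shows up as one failure among three passes.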

These deviations are critical for hardware in the loop testing. It is very common to run a single suite of tests against many different device variations (e.g., design variants for your PCB stuffing options). Configuration schemes embedded in ID chips or pull-up resistors may tell us which tests should be run and what data we should expect to receive back. This can only happen if we allow our environment to first run a few functions that gather the necessary data before running our suite of tests (although this can also be worked around within the test file itself).
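As a sketch of how this can look, the conftest.py below selects expected register values per board variant at collection time. The --board-variant command-line option and the VARIANT_STIMULUS table are hypothetical stand-ins; on real hardware, the variant would more likely come from reading an ID chip or strap resistors before collection. The hooks and methods used (pytest_addoption(), parser.addoption(), metafunc.config.getoption(), metafunc.parametrize()) are standard Pytest APIs. It is shown on its own; in practice it would be merged into the existing pytest_generate_tests().

```python
# conftest.py (sketch): choose stimulus per board variant at collection time

# Hypothetical expected register values for each PCB stuffing variant
VARIANT_STIMULUS = {
    "variant_a": [12, 8, 9, 22],
    "variant_b": [12, 8, 0, 0],
}

def create_tests(variant):
    """Return (register index, expected value) pairs for one board variant."""
    return list(enumerate(VARIANT_STIMULUS[variant]))

def pytest_addoption(parser):
    # Hypothetical option; a real setup might query an ID chip instead.
    parser.addoption(
        "--board-variant",
        action="store",
        default="variant_a",
        help="PCB variant under test (illustrative)",
    )

def pytest_generate_tests(metafunc):
    # Only parameterize tests that ask for both arguments.
    if {"index", "expected"} <= set(metafunc.fixturenames):
        variant = metafunc.config.getoption("--board-variant")
        metafunc.parametrize("index, expected", create_tests(variant))
```

A matching (hypothetical) test such as def test_register(index, expected): assert get_reg_data(index) == expected would then be expanded into one reported test per register when run with pytest --board-variant=variant_b.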

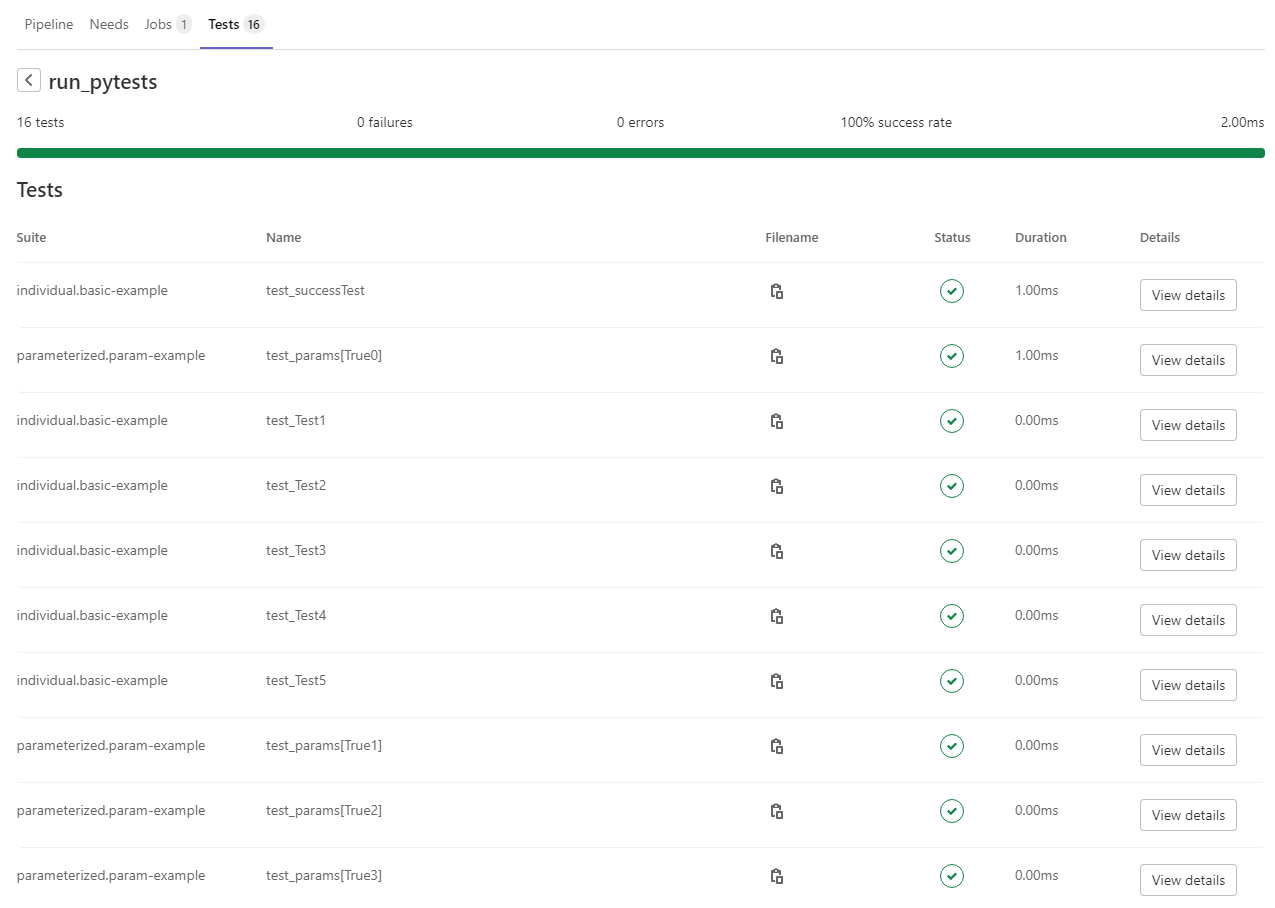

If we now observe the test report generated by Pytest (and then parsed by Gitlab), you will notice two suites of tests: individual tests and parameterized tests.

Figure 1: Test Results using Gitlab CI

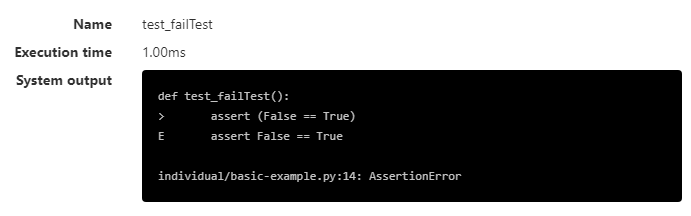

The individual tests show up with their unique names, such as test_successTest or test_Test1. The parameterized tests also show up as individual tests but use a subset naming scheme such as test_param[True0] or test_param[True1] (which were generic names that I gave to the tests). If only one of those parameterized tests fails, then a single test will failure will be reported like so:

Figure 2: Failed Test Results using Gitlab CI

This gives you the information needed to understand why that particular test failed, so you can resolve it as quickly as possible (versus reviewing every iteration of a single test).
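GitLab builds this view from a JUnit-style XML report, which Pytest writes when run with the --junitxml option. As a hedged sketch, the script below pulls the names of failing test cases out of such a report using only the standard library. SAMPLE_REPORT is a hand-written, illustrative excerpt, not output from this project's CI, and failed_tests() is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# Illustrative excerpt of a JUnit XML report like the one produced by
# `pytest --junitxml=report.xml`; names and messages are made up.
SAMPLE_REPORT = """
<testsuites>
  <testsuite name="pytest" tests="3" failures="1" errors="0" skipped="0">
    <testcase classname="test_example" name="test_params[True0]" time="0.001"/>
    <testcase classname="test_example" name="test_params[True1]" time="0.001">
      <failure message="assert False is True">assertion details</failure>
    </testcase>
    <testcase classname="test_example" name="test_Test1" time="0.001"/>
  </testsuite>
</testsuites>
"""

def failed_tests(report_xml):
    """Return names of test cases containing a <failure> or <error> element."""
    root = ET.fromstring(report_xml.strip())
    failed = []
    for case in root.iter("testcase"):
        if case.find("failure") is not None or case.find("error") is not None:
            failed.append(case.get("name"))
    return failed

print(failed_tests(SAMPLE_REPORT))
```

This prints ['test_params[True1]']. Because each parameterized case has its own testcase entry, the failing stimulus is identified directly by its ID.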

Conclusion

In this article, we reviewed the concept of individual tests versus parameterized tests using the Pytest framework. We looked at the differences and at why and when you would want to use parameterized tests over the traditional, single-function style. Additionally, we walked through an example to demonstrate the differences and explained why it was necessary to deviate from the standard built-in decorator. The reader should now be able to put together many reusable and extensible tests using very few lines of code. Readers are also invited to view the full source code and CI builds hosted on GitLab for reference.